Liu M Y, Huang X, Mallya A, et al. Few-shot unsupervised image-to-image translation[J]. arXiv preprint arXiv:1905.01723, 2019.

1. Overview

1.1. Motivation

1) Existing translation methods require access to many images in both source and target classes at training time.

This paper proposes a few-shot unsupervised image-to-image translation (FUNIT) algorithm.

1) Works on previously unseen target classes, specified at test time by only a few example images.

2) Represents a target class by averaging the latent codes of a set of $K$ sample images.

3) Exploits AdaIN in the decoder and a multi-task adversarial discriminator.

2. FUNIT

2.1. Definition

$\bar{x} = G(x, \lbrace y_1, \dots, y_K \rbrace); \quad c_x, c_y \in S, \; c_x \ne c_y$

1) A content image $x \in$ class $c_x$.

2) A set of $K$ class images $\lbrace y_1, \dots, y_K \rbrace \in$ class $c_y$.

3) During training, $c_x, c_y$ are randomly sampled from the set of source classes $S$.

4) During testing, the target class $c$ comes from the set of previously unseen classes $T$.
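The training-time sampling in 2.1 can be sketched as follows. This is a minimal illustration, not the authors' data loader: the function `sample_episode`, the toy class dictionary, and sampling the $K$ class images without replacement are all assumptions made for the example.

```python
import random

def sample_episode(images_by_class, k, rng):
    """Pick a content image x and K class images from two distinct classes."""
    # Sampling two keys without replacement guarantees c_x != c_y.
    c_x, c_y = rng.sample(sorted(images_by_class), 2)
    x = rng.choice(images_by_class[c_x])
    # Assumption: the K class images are drawn without replacement.
    ys = rng.sample(images_by_class[c_y], k)
    return c_x, x, c_y, ys

# Hypothetical toy source set S: class name -> list of image identifiers.
source_classes = {
    "husky": ["husky_0", "husky_1", "husky_2"],
    "tabby": ["tabby_0", "tabby_1", "tabby_2"],
    "lion": ["lion_0", "lion_1", "lion_2"],
}

rng = random.Random(0)
c_x, x, c_y, ys = sample_episode(source_classes, k=2, rng=rng)
```

At test time the same call would be made with a dictionary of unseen classes $T$ for the class images, while the content image can come from any class.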

2.2. Details

$\bar{x} = F_x(z_x, z_y) = F_x(E_x(x), E_y(\lbrace y_1, \dots, y_K \rbrace))$

1) Content Encoder $E_x$ to extract local structure (pose).

2) Class Encoder $E_y$ to extract global look (object appearance). It first maps each of the $K$ samples to a latent vector, then averages the $K$ vectors to obtain $z_y$.

3) Decoder $F_x$ exploits AdaIN.

4) The generalization capability depends on the number of source object classes seen during training.

5) Discriminator $D$ outputs a vector of length $|S|$, one entry per source class. For a real image $x$, only the $c_x$-th element is used; for a translated image $\bar{x}$, only the $c_y$-th element is used.
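The class-code averaging and the AdaIN step in the decoder can be sketched with NumPy. This is an illustration under stated assumptions, not the paper's network: random arrays stand in for the encoder outputs, and the linear map `W_affine` from $z_y$ to the AdaIN scale and shift is a hypothetical placeholder.

```python
import numpy as np

def class_code(per_image_codes):
    """Average K per-image latent vectors into a single class code z_y."""
    return np.mean(per_image_codes, axis=0)

def adain(features, gamma, beta, eps=1e-5):
    """Adaptive instance normalization on a (C, H, W) feature map.

    Each channel is normalized over its spatial positions, then scaled
    by gamma[c] and shifted by beta[c].
    """
    mean = features.mean(axis=(1, 2), keepdims=True)
    std = features.std(axis=(1, 2), keepdims=True)
    normalized = (features - mean) / (std + eps)
    return gamma[:, None, None] * normalized + beta[:, None, None]

rng = np.random.default_rng(0)
K, code_dim, C, H, W = 3, 8, 4, 5, 5

# Stand-in for the class encoder applied to each of the K class images.
per_image_codes = rng.normal(size=(K, code_dim))
z_y = class_code(per_image_codes)

# Hypothetical linear map from z_y to AdaIN parameters (an assumption).
W_affine = rng.normal(size=(2 * C, code_dim))
gamma, beta = np.split(W_affine @ z_y, 2)

# Stand-in for a content feature map inside the decoder.
z_x = rng.normal(size=(C, H, W))
out = adain(z_x, gamma, beta)
```

After AdaIN, each channel of `out` has mean `beta[c]` and standard deviation close to `|gamma[c]|`, so the class code controls the per-channel statistics while the spatial layout still comes from the content features.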

2.3. Loss Function

$\min_D \max_G L_{GAN}(D, G) + \lambda_R L_R(G) + \lambda_F L_{FM}(G)$

1) GAN Loss

$L_{GAN}(D, G) = E_x [-\log D^{c_x}(x)] + E_{x, \lbrace y_1, \dots, y_K \rbrace}[ \log (1 - D^{c_y}(\bar{x})) ]$

2) Content Reconstruction Loss (the content image also serves as the single class image, i.e., $K=1$)

$L_R(G) = E_x[\lVert x - G(x, \lbrace x \rbrace) \rVert_1^1 ]$

3) Feature Matching Loss ($D_f$ is the feature extractor obtained by removing the last (prediction) layer of $D$)

$L_{FM}(G) = E_{x, \lbrace y_1, \dots, y_K \rbrace} [\lVert D_f(\bar{x}) - \sum_k \frac{D_f(y_k)}{K} \rVert_1^1]$
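The three loss terms can be evaluated on toy values as a sanity check. This is a single-sample sketch under stated assumptions: the discriminator outputs are taken to be probabilities in $(0, 1)$, all arrays are made-up numbers, and the weights $\lambda_R = 0.1$, $\lambda_F = 1$ are the ones listed in 3.1.

```python
import numpy as np

def gan_loss(d_real, c_x, d_fake, c_y):
    """GAN term for one sample, reading only the class-specific outputs.

    d_real, d_fake: length-|S| discriminator outputs for x and x_bar.
    """
    return -np.log(d_real[c_x]) + np.log(1.0 - d_fake[c_y])

def reconstruction_loss(x, x_rec):
    """L1 distance between x and its reconstruction G(x, {x})."""
    return np.abs(x - x_rec).sum()

def feature_matching_loss(feat_fake, feats_class):
    """L1 distance between D_f(x_bar) and the mean of the K features D_f(y_k)."""
    return np.abs(feat_fake - feats_class.mean(axis=0)).sum()

# Toy discriminator outputs over |S| = 3 source classes (made-up values).
d_real = np.array([0.9, 0.2, 0.1])   # D(x), with c_x = 0
d_fake = np.array([0.3, 0.5, 0.4])   # D(x_bar), with c_y = 1
l_gan = gan_loss(d_real, 0, d_fake, 1)

# Toy 2x2 "image" and its reconstruction.
x = np.array([[0.0, 1.0], [0.5, 0.5]])
x_rec = np.array([[0.1, 0.8], [0.5, 0.4]])
l_r = reconstruction_loss(x, x_rec)

# Toy features for x_bar and for K = 2 class images.
feat_fake = np.array([1.0, 2.0])
feats_class = np.array([[0.0, 2.0], [1.0, 4.0]])
l_f = feature_matching_loss(feat_fake, feats_class)

lambda_r, lambda_f = 0.1, 1.0
total = l_gan + lambda_r * l_r + lambda_f * l_f
```

Only `d_real[c_x]` and `d_fake[c_y]` enter the GAN term, so the remaining entries of each output vector are ignored for that sample, matching the class-specific reading of the discriminator output in 2.2.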

3. Experiments

3.1. Details

1) $\lambda_R = 0.1, \lambda_F = 1$.

2) Datasets: Animal Faces, Birds, Flowers, Foods.

3) Metrics: Translation Accuracy, Content Preservation (domain-invariant perceptual distance, DIPD), Photorealism (Inception Score) and Distribution Matching (FID).

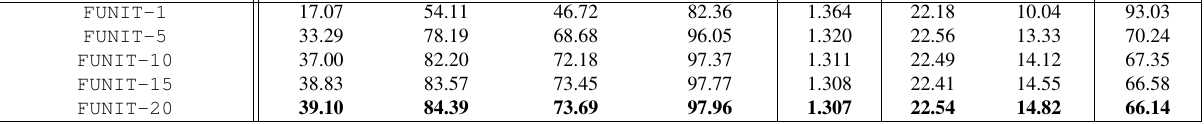

3.2. Ablation Study

1) At test time, a larger $K$ improves performance (FUNIT-$K$ denotes the model given $K$ target-class images).

3.3. Visualization